In my intense love affair with Google Cloud Platform (GCP), I’ve never felt more inspired to write content and try things out. After starting with a Snowplow Analytics setup guide and continuing with a Lighthouse audit automation tutorial, I’m going to show you yet another cool thing you can do with GCP.

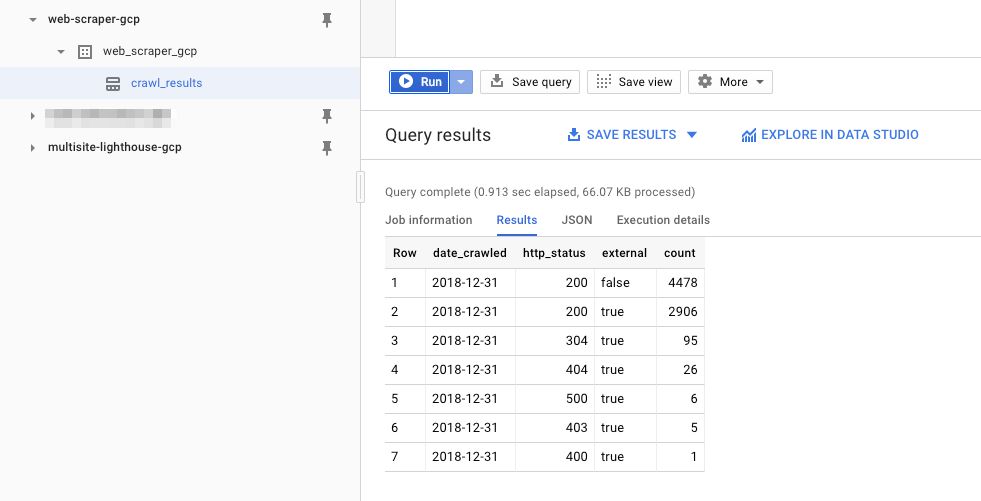

In this guide, I’ll show you how to use an open-source web crawler running in a Google Compute Engine virtual machine (VM) instance to scrape all the internal and external links of a given domain, and write the results into a BigQuery table.
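To make the "internal vs. external" distinction concrete, here is a minimal Python sketch of how a crawled link could be classified and shaped into a row before it is written to a table. This is not the crawler used in this guide. The function name `classify_links`, the row fields (`source_url`, `link_url`, `is_internal`), and the rule that subdomains count as internal are all assumptions made for illustration.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, hrefs, site_domain):
    """Turn raw href values found on one page into table-like rows.

    Hypothetical helper: the field names and the "subdomains are
    internal" rule are assumptions, not part of the guide's setup.
    """
    rows = []
    for href in hrefs:
        # Resolve relative links such as "/about" against the page URL.
        absolute = urljoin(page_url, href)
        parsed = urlparse(absolute)

        # Skip non-web links such as mailto: or javascript:.
        if parsed.scheme not in ("http", "https"):
            continue

        host = parsed.hostname or ""
        is_internal = host == site_domain or host.endswith("." + site_domain)

        rows.append(
            {
                "source_url": page_url,
                "link_url": absolute,
                "is_internal": is_internal,
            }
        )
    return rows


if __name__ == "__main__":
    example_rows = classify_links(
        "https://www.example.com/blog/",
        ["/about", "https://other.org/x", "mailto:someone@example.com"],
        "example.com",
    )
    for row in example_rows:
        print(row)
```

Each dictionary maps naturally onto one row of a table with three columns, which is roughly the shape of data you would expect a link crawl to produce.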