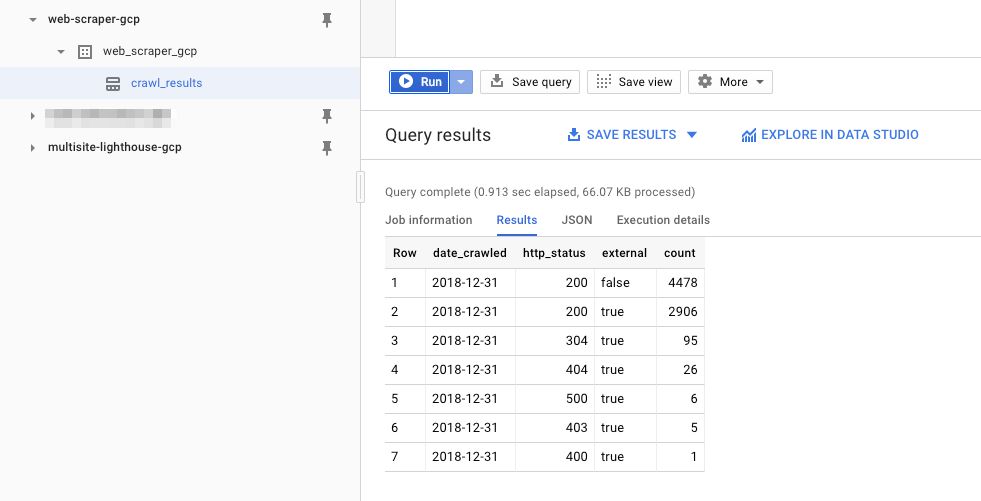

On New Year’s Eve 2018, I published an article which instructed how to scrape pages of a site and write the results into Google BigQuery. I considered it to be a cool way to build your own web scraper, as it utilized the power and scale of the Google Cloud platform combined with the flexibility of a headless crawler built on top of Puppeteer.

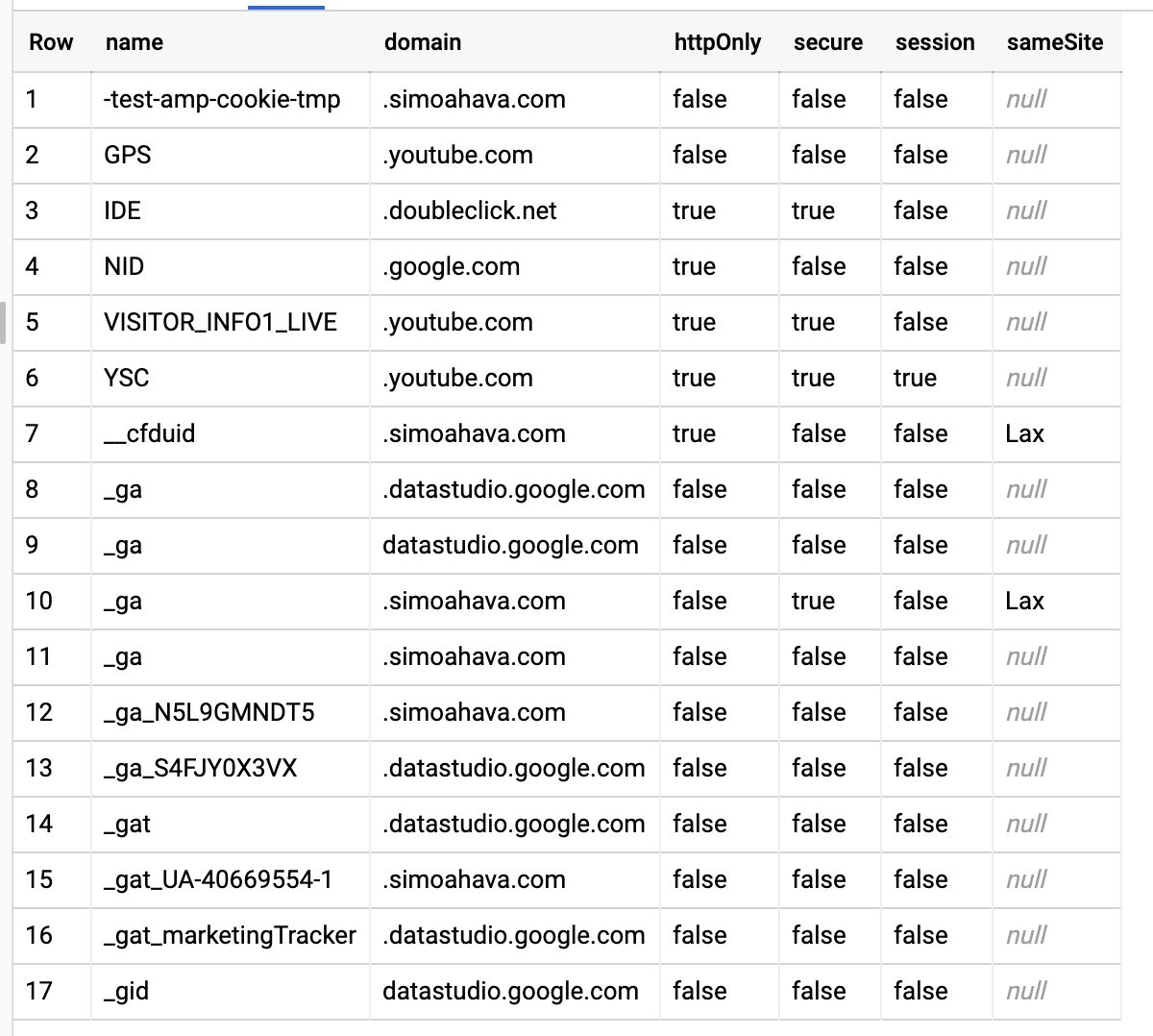

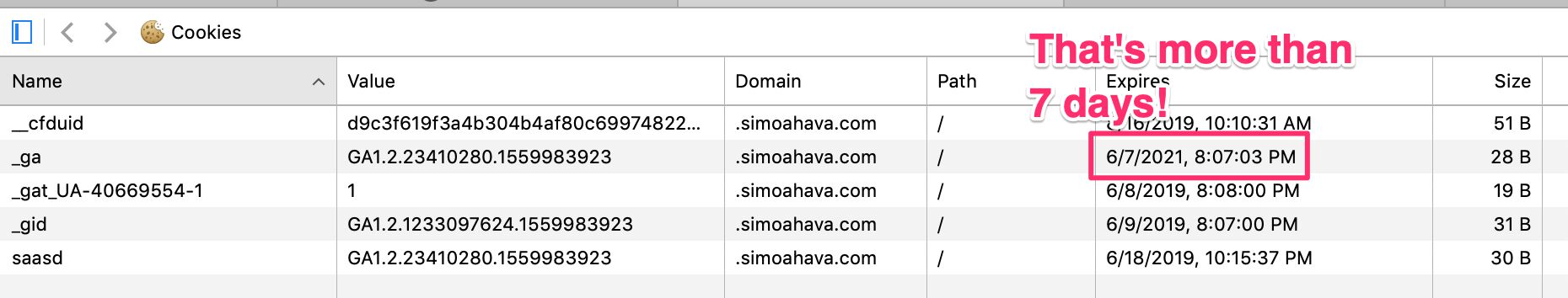

In today’s article, I’m revisiting this solution in order to share with you its latest version, which includes a feature that you might find extremely useful when auditing the cookies that are dropped on your site.